Data Mesh: An easier alternative to ETL

ETL/ELT are critical in our digital age

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are high-priority initiatives within companies that understand the value of data. These companies recognize that delivering data into a Data Warehouse or Data Lake is critical to enabling BI (Business Intelligence) and to ensuring that teams make business decisions based on sound analysis. Why does this analysis matter? Because without it, companies decide on intuition rather than data. And, as Daniel Kahneman, Nobel laureate in economics and author of Thinking, Fast and Slow, shows us, human intuition is prone to systematic errors.

BI tools let you visualize and analyze all of your organization’s collective data to perform tasks such as trend analysis and customer segmentation. There is incredible value in doing so, because these abilities help a company identify each segment’s willingness to pay and the feature or item sets those customers want, the messaging that resonates with each segment, and what each segment values in its customer decision journey.

But ETL/ELT processes are hard to set up and maintain

This sounds great in theory: a well of data with oracle-like wisdom providing insights into how to maximize your revenue. But how hard is this to achieve?

In essence, you need to:

- Develop substantial data pipelines to feed your Data Warehouse or Data Lake, running large infrastructure that, at a minimum, duplicates all your data.

- Incorporate data transformation at this stage to ensure that the data arriving at your data repository is normalized (for ETL users). ELT users will instead perform this task at the data repository level.

- Transfer all your data to the desired destinations.

- Keep your data readily available for any analysis your marketing or BI team wants to run.

- Decide on the frequency that this data needs to be updated for users and implement either a batching or streaming process to ensure your warehouse or lake doesn’t become stale.

- Ensure your organization has the flexibility to be able to add new data sources, modify existing data sources or change the nature of the data you are piping after feedback from the data end-users.
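To make the steps above concrete, here is a minimal Python sketch of the ETL and ELT orderings side by side. It is an illustration under stated assumptions, not a production pipeline: SQLite from the standard library stands in for the warehouse, the `CRM_ROWS` source data and the `customers`, `customers_raw`, and `customers_elt` table names are hypothetical, and "normalization" is reduced to trimming and lowercasing text.

```python
import sqlite3

# Hypothetical source records (e.g. exported from a CRM). The schema and values
# are illustrative assumptions, not taken from any real system.
CRM_ROWS = [
    {"id": 1, "email": " Alice@Example.com ", "plan": "pro"},
    {"id": 2, "email": "bob@example.com", "plan": "FREE"},
]


def extract():
    """Extract: pull raw rows from the source (here, an in-memory list)."""
    return list(CRM_ROWS)


def transform(rows):
    """Transform: normalize emails and plan names in the pipeline itself."""
    return [
        {"id": r["id"], "email": r["email"].strip().lower(), "plan": r["plan"].lower()}
        for r in rows
    ]


def load(conn, table, rows):
    """Load: write rows into a warehouse table (table names are fixed, internal values)."""
    conn.execute(
        f"CREATE TABLE IF NOT EXISTS {table} (id INTEGER PRIMARY KEY, email TEXT, plan TEXT)"
    )
    conn.executemany(
        f"INSERT OR REPLACE INTO {table} (id, email, plan) VALUES (:id, :email, :plan)",
        rows,
    )
    conn.commit()


def run_etl(conn):
    # ETL: transform before loading, so only clean data reaches the warehouse.
    load(conn, "customers", transform(extract()))


def run_elt(conn):
    # ELT: load the raw data first, then transform inside the warehouse with SQL.
    load(conn, "customers_raw", extract())
    conn.execute("DROP TABLE IF EXISTS customers_elt")
    conn.execute(
        "CREATE TABLE customers_elt AS "
        "SELECT id, lower(trim(email)) AS email, lower(plan) AS plan FROM customers_raw"
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_etl(conn)
    run_elt(conn)
    print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
    print(conn.execute("SELECT * FROM customers_elt ORDER BY id").fetchall())
```

Both paths end with the same clean rows; the difference is where the transformation runs. Note that the ELT path keeps both the raw and the transformed table, a small-scale instance of the data duplication this article is concerned with.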

This is a substantial amount of work, and it is very difficult to get right. The challenges are compounded in tight corporate sprints, where straying from predetermined project constraints is treated as a sin.

Doing this in-house is complicated and time-consuming: you have to build all the APIs and logic to batch process or live stream data from every software source you have that *could* add value to a customer picture. Needless to say, the scope creep here can be endless. Stories are common of 3 developers and a data scientist being allocated 2 weeks to create an ETL pipeline, only for it to turn into a 30-week project that still lacks a clean, finalized pipeline. So, what’s the alternative?

ETL/ELT providers can help, but the fundamentals of data duplication persist

As we have detailed throughout our site, ETL providers can help. These providers offer wonderful shortcuts, and each has invested a lot of time in making the process as plug-and-play as possible. But the problem remains that mass data duplication is occurring: you pay substantial fees for databases to house all of this ‘enriched’ data, plus the subscription and usage fees of the ETL/ELT providers themselves. AWS has recognized this booming trend and is capitalizing on it, proposing a mix of Redshift, S3, and Spectrum to keep costs as economical as possible: a trailing 90 days of data stays in Redshift for time-series queries, while everything older goes on ice in lower-cost S3 storage, where Spectrum can still query it. Yet this is a constant stream of data that will only grow over time, and so will your costs.
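The hot/cold split described above reduces to a simple retention rule. The Python sketch below shows only that rule, assuming each record carries an event date; it does not call any AWS API, and the `split_by_tier` function and the `(event_date, payload)` record shape are hypothetical. In a real setup the cold tier would be written to S3 rather than returned as a list.

```python
from datetime import date, timedelta

HOT_WINDOW_DAYS = 90  # trailing window kept in the "hot" warehouse tier


def split_by_tier(records, today, window_days=HOT_WINDOW_DAYS):
    """Partition (event_date, payload) records into hot (recent) and cold (older) tiers.

    A record exactly `window_days` old falls into the cold tier.
    """
    cutoff = today - timedelta(days=window_days)
    hot, cold = [], []
    for event_date, payload in records:
        # Recent records stay in the warehouse; older ones move to cheap storage.
        (hot if event_date > cutoff else cold).append((event_date, payload))
    return hot, cold


if __name__ == "__main__":
    records = [(date(2024, 1, 1), "old order"), (date(2024, 5, 1), "recent order")]
    hot, cold = split_by_tier(records, today=date(2024, 6, 1))
    print("hot:", hot)
    print("cold:", cold)
```

Because every day’s data eventually crosses the cutoff, the cold tier only ever grows, which is why tiering slows storage costs down without stopping their growth.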

But… is all this effort needed in the first place? What are we actually trying to do? If your objectives are simply to achieve a unified data picture and let teams pull specific data with the appropriate privileges, there may now be a much simpler and more cost-effective way.

A simpler and cheaper way for team data availability

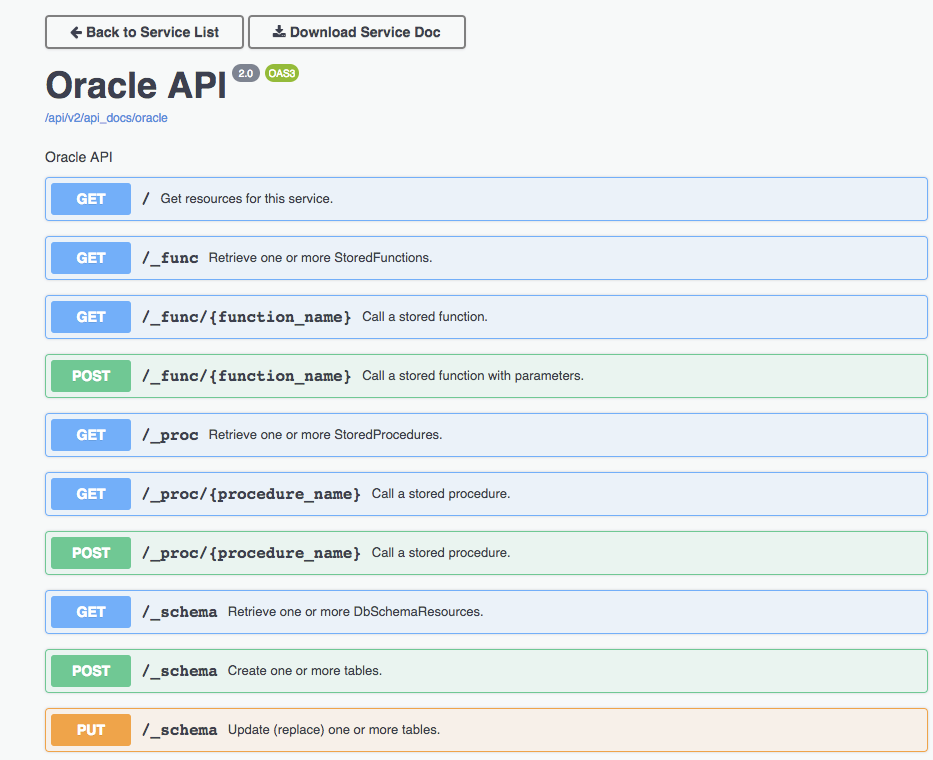

We’ve started to see a group break away from ETL in favor of what they are starting to dub ‘Anti-ETL’. This alternative embraces a Data Mart approach built on API gateways. What exactly does this mean?

Put simply, by creating high-quality, standardized APIs, companies pursue a Data Mart approach that lets their end-user departments retrieve the exact data they need, when they need it. It is the software version of Just-In-Time delivery, and it fits the image of a shopping (data) mart that is conveniently available no matter where you sit in an organization.

But orchestrating the needed data through APIs is typically difficult, especially because so many APIs have been built in overly complex and inflexible ways. There are also governance hurdles: who in which teams can access which types of data? Gartner and Forrester are very clear on both the importance and the difficulty of APIs in numerous research publications, going so far as to suggest that APIs are the biggest bottleneck in IT and must also be the cornerstone of an effective digital strategy. So why complicate APIs even further by making them available to all of your teams?
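Just-in-time retrieval and governance can be sketched together as a data mart endpoint that checks a role-based policy before returning only the fields a team asked for. Everything here is hypothetical and simplified: `POLICY`, `DATASETS`, `AccessDenied`, and `query_data_mart` are illustrative names, and in-memory lists stand in for the systems behind real APIs. An API gateway product would enforce this through its own role configuration, not through code like this.

```python
# Hypothetical access policy: which role may read which fields of which dataset.
POLICY = {
    "marketing": {"customers": {"id", "segment", "region"}},
    "finance": {
        "customers": {"id", "region"},
        "invoices": {"id", "customer_id", "amount"},
    },
}

# Hypothetical datasets standing in for the systems behind each API.
DATASETS = {
    "customers": [
        {"id": 1, "email": "alice@example.com", "segment": "smb", "region": "EU"},
        {"id": 2, "email": "bob@example.com", "segment": "enterprise", "region": "US"},
    ],
    "invoices": [{"id": 10, "customer_id": 1, "amount": 120.0}],
}


class AccessDenied(Exception):
    """Raised when a role requests a dataset or field it is not allowed to read."""


def query_data_mart(role, dataset, fields):
    """Return only the requested fields, provided the role may read all of them."""
    allowed = POLICY.get(role, {}).get(dataset)
    if allowed is None:
        raise AccessDenied(f"{role!r} may not read {dataset!r}")
    denied = set(fields) - allowed
    if denied:
        raise AccessDenied(f"{role!r} may not read {sorted(denied)} in {dataset!r}")
    # Just-in-time projection: read at request time and return nothing extra.
    return [{f: row[f] for f in fields} for row in DATASETS[dataset]]


if __name__ == "__main__":
    print(query_data_mart("marketing", "customers", ["id", "segment"]))
    try:
        query_data_mart("marketing", "customers", ["email"])
    except AccessDenied as err:
        print("denied:", err)
```

Denying the whole request when any field is off-limits, rather than silently dropping that field, is one design choice among several; it makes policy violations visible to the requesting team.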

Because it is getting easier and easier to get great, standardized, reusable, and governance-aware APIs. DreamFactory, for example, has developed an API Framework that creates best-practice REST APIs (and soon GraphQL APIs) instantly, and lets you quickly build the business logic for an API gateway approach with minimal code. Presented with such solutions, developers often counter that they can “quickly hand-code” APIs. While true, these manually created APIs are rarely commercially viable from the start. DreamFactory can generate fully documented, standardized, reusable, and secure APIs that plug into a common control plane with seamless governance features. While some API gateway tools forgo this API creation ability, our conclusion is that generation capabilities are a requirement, given the variance in quality and usability we see from API to API.

In a recent blog post, DreamFactory outlines the steps needed to use its Data Mesh feature as an ETL alternative with significantly less setup and maintenance effort than traditional ETL. The process uses the DreamFactory API platform to rapidly create REST APIs (without any code) for all your data sources, or to plug into existing REST APIs, and then pulls data from all of those disparate sources with a single API call. The Data Mesh feature lets you combine this data effortlessly, and the scripting engine, with your choice of Python, V8JS, JavaScript, or PHP, lets you achieve the data transformation with remarkably little code.
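The fan-out-and-merge pattern behind a single-call data mesh can be sketched in a few lines of Python. This is not DreamFactory’s API or scripting interface; it is a hypothetical illustration in which `fetch_crm`, `fetch_billing`, and `fetch_support` stand in for REST calls to three sources, rows are merged on an assumed shared `customer_id` key, and a small `transform` step plays the role of a server-side script.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for REST APIs over three separate systems. In practice
# each would be an HTTP request; plain functions keep the sketch runnable offline.
def fetch_crm():
    return [{"customer_id": 1, "name": "Alice"}, {"customer_id": 2, "name": "Bob"}]


def fetch_billing():
    return [{"customer_id": 1, "mrr": 49.0}, {"customer_id": 2, "mrr": 0.0}]


def fetch_support():
    return [{"customer_id": 1, "open_tickets": 2}]


SOURCES = {"crm": fetch_crm, "billing": fetch_billing, "support": fetch_support}


def mesh(key="customer_id"):
    """Call every source concurrently, then merge rows that share the same key."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn) for name, fn in SOURCES.items()}
        results = {name: fut.result() for name, fut in futures.items()}
    merged = {}
    for rows in results.values():
        for row in rows:
            merged.setdefault(row[key], {}).update(row)
    return [merged[k] for k in sorted(merged)]


def transform(rows):
    """Post-merge transformation: fill missing fields and derive a simple flag."""
    return [
        {**row, "open_tickets": row.get("open_tickets", 0), "paying": row.get("mrr", 0) > 0}
        for row in rows
    ]


if __name__ == "__main__":
    for row in transform(mesh()):
        print(row)
```

Nothing is persisted here: the combined view is assembled at request time rather than copied into a warehouse, which is the heart of the cost argument in this article. A customer missing from one source (Bob has no support record) simply lacks those fields until `transform` fills in a default.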

Does DreamFactory’s Data Mesh alternative replace heavy-load ETL? No. But it does provide a far simpler and less costly alternative for many of the ETL needs seen today, primarily getting the right data to end-user teams when they need it, while avoiding a never-ending cycle of data duplication and undulating project management terrain.
